Ryan Haines / Android Authority

TL;DR

- Google has released Gemini 2.5 Flash, a new lightweight AI model with improved reasoning.

- It’s the first Gemini model to offer adjustable thinking settings to balance cost, speed, and quality.

- It’s available now in preview via the Gemini API in Google AI Studio and Vertex AI, as well as in the Gemini app.

Just when you thought you’d got your head around all the Gemini models, Google goes and adds another one to the list. The company has announced that Gemini 2.5 Flash is now available in preview via the Gemini API, with access through both Google AI Studio and Vertex AI.

According to Google, the new model builds on Gemini 2.0 Flash, retaining its speed and low cost while making a “major upgrade” to reasoning capabilities. It’s described as the company’s first fully hybrid reasoning model: developers can toggle thinking on or off and set a thinking budget (a cap on how many tokens the model may spend reasoning before it responds), effectively controlling how much the model “thinks” on a per-query basis.
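For developers, the thinking budget is set per request. The sketch below builds, but does not send, a request body for the Gemini API’s REST `generateContent` endpoint (`https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent`). The field path `generationConfig.thinkingConfig.thinkingBudget`, the preview model ID, and the 24,576-token upper limit reflect our understanding of the preview API and are assumptions to check against Google’s current documentation. `build_request` is a hypothetical helper, not part of any Google SDK.

```python
import json

# Assumption: preview model ID at launch; check the Gemini API docs for the current name.
MODEL_ID = "gemini-2.5-flash-preview-04-17"
# Assumption: upper bound on 2.5 Flash's thinking budget during the preview.
MAX_THINKING_BUDGET = 24576


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Hypothetical helper: build a generateContent request body with a thinking budget.

    A budget of 0 switches thinking off; a larger value lets the model spend
    up to that many tokens reasoning before it answers.
    """
    if not 0 <= thinking_budget <= MAX_THINKING_BUDGET:
        raise ValueError(f"thinking_budget must be between 0 and {MAX_THINKING_BUDGET}")
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


# A simple lookup: thinking off, favoring speed and cost.
fast = build_request("What is the capital of France?", thinking_budget=0)
# A multi-step problem: give the model room to reason.
careful = build_request("Prove that the square root of 2 is irrational.", thinking_budget=4096)

# The body you would POST to the endpoint for MODEL_ID.
print(json.dumps(careful, indent=2))
```

Setting the budget to 0 trades reasoning for speed and cost, which suits simple lookups; a larger budget lets the model work through multi-step problems at the price of more tokens.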

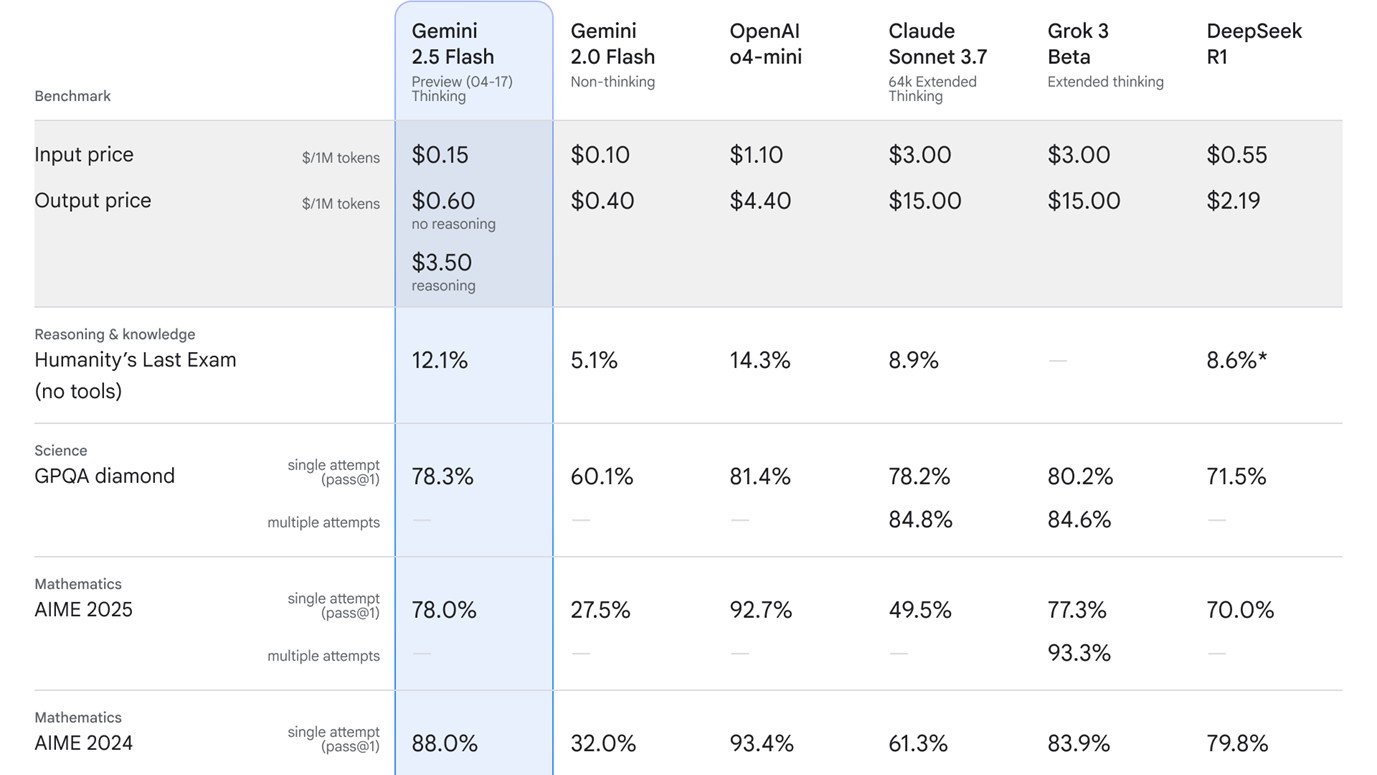

Google shared benchmark results to demonstrate Gemini 2.5 Flash’s versatility across math, science, reasoning, and code generation tasks. While it doesn’t outperform rival AI models in every area, it offers strong performance for the price, with Google calling it a “Pareto frontier” model. This means it sits on the best available trade-off between speed, cost, and quality: no rival in Google’s comparison beats it on one of those measures without falling behind on another. You can see the full benchmark breakdown in the official blog post.
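To make the “Pareto frontier” idea concrete, here is a minimal sketch that picks out the non-dominated models from a list. It uses four hypothetical models with made-up numbers (not Google’s benchmarks or prices) and, for brevity, scores them only on cost and quality, leaving speed out. A model is on the frontier if no other model is at least as cheap and at least as good while being strictly better on one of the two.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float     # hypothetical price per million tokens (lower is better)
    quality: float  # hypothetical benchmark score (higher is better)


def dominates(a: Model, b: Model) -> bool:
    """True if a is no worse than b on both axes and strictly better on at least one."""
    no_worse = a.cost <= b.cost and a.quality >= b.quality
    strictly_better = a.cost < b.cost or a.quality > b.quality
    return no_worse and strictly_better


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Keep only the models that no other model dominates."""
    return [m for m in models if not any(dominates(o, m) for o in models if o is not m)]


# Illustrative numbers only; these are NOT real prices or benchmark results.
models = [
    Model("model-a", cost=0.5, quality=60.0),
    Model("model-b", cost=1.0, quality=75.0),
    Model("model-c", cost=2.0, quality=70.0),  # dominated by model-b: costs more, scores lower
    Model("model-d", cost=5.0, quality=85.0),
]

print([m.name for m in pareto_frontier(models)])  # ['model-a', 'model-b', 'model-d']
```

Every model left on the frontier is a reasonable choice at its price point; only `model-c` is strictly worse than an alternative.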

Gemini 2.5 Flash is also available in the Gemini app, where users can try it alongside new features like Canvas, a collaborative space for refining text and code.

This is the latest addition to a fast-growing family of Gemini models. Last year, Google launched Gemini 1.5 Pro, followed by Gemini 1.5 Flash. More recently, Gemini 2.0 Flash and the lightweight 2.0 Flash-Lite were released in February 2025. Google clearly isn’t afraid of giving developers plenty of options, and today’s launch only adds to the menu.