Apple’s latest AI models could lead to new Apple Vision Pro and Apple Intelligence features.

Apple has published two new research papers on its Machine Learning blog, detailing an AI model for improved photogrammetry and another that acts as a video-related personal assistant.

The iPhone maker has long invested in machine learning, work that has since evolved into its own take on AI. The company also publishes research papers to showcase its progress on future technologies.

Apple Intelligence gives users access to features such as Image Playground, AI-generated Smart Replies in the Mail app, email and notification summaries, a new Writing Tools framework, and much more.

Apple remains focused on artificial intelligence research, and two newly published papers offer insight into the direction future AI features might take. Specifically, the company documented two AI models, Matrix3D and StreamBridge, on its Machine Learning Blog.

Matrix3D allows for improved photogrammetry

Apple says Matrix3D is an all-in-one Large Photogrammetry Model, meaning it streamlines the process of creating 3D objects from 2D images and reduces what that process requires. As shown in Apple’s example videos, it can create 3D objects and environments from only a few images.

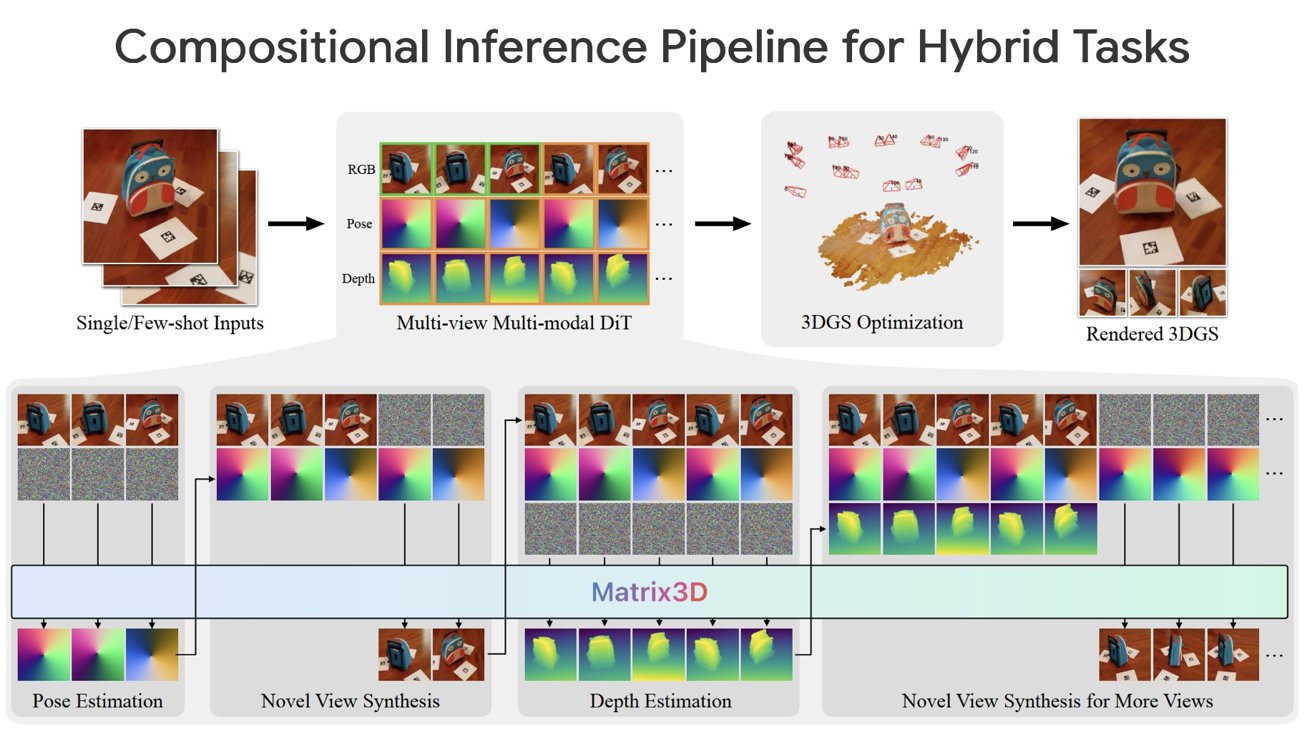

Apple’s Matrix3D model streamlines the photogrammetry process. Image Credit: Apple’s Machine Learning Blog.

Photogrammetry as a whole is hardly a new concept, and it has long been used in industries such as game development. Apple’s Matrix3D, however, simplifies what was once a multi-step endeavor, reducing the errors that can accumulate between separate steps.

Traditional photogrammetry treats each sub-process as an independent step that requires its own algorithm. Apple’s new AI model instead handles pose estimation, depth estimation, and novel view synthesis within a single, unified architecture, which allows for improved accuracy.

The company’s Matrix3D model was trained using a masked learning strategy. In essence, the model was given image, depth, and pose data with portions deliberately left out, which effectively required it to “fill in the blanks” to achieve the desired result.
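The article does not describe how Apple actually masks its training data. As a rough illustration of the general idea only, the hypothetical Python sketch below hides a random share of the image, pose, and depth inputs across several views and keeps the hidden values as the targets a model would learn to reconstruct. The function name `mask_views`, the modality labels, and the masking ratio are assumptions made for illustration, not details from Apple’s paper.

```python
import random
from typing import Any, Optional

# Assumed per-view inputs (hypothetical labels, not Apple's).
MODALITIES = ("image", "pose", "depth")

View = dict[str, Optional[Any]]


def mask_views(
    views: list[View], mask_ratio: float, rng: random.Random
) -> tuple[list[View], dict[tuple[int, str], Any]]:
    """Hide a random fraction of (view, modality) slots.

    Returns the partially hidden views (masked slots set to None) and a
    dict of the hidden values, i.e. the "blanks" a model must fill in.
    """
    # Every (view index, modality) pair that actually has data is a candidate.
    slots = [(i, m) for i, v in enumerate(views) for m in MODALITIES if v.get(m) is not None]
    if not slots:
        return [dict(v) for v in views], {}
    # Hide at least one slot, but always leave at least one visible as context.
    n_mask = min(len(slots) - 1, max(1, int(len(slots) * mask_ratio)))
    masked = set(rng.sample(slots, n_mask))
    visible = [
        {m: (None if (i, m) in masked else v.get(m)) for m in MODALITIES}
        for i, v in enumerate(views)
    ]
    targets = {slot: views[slot[0]][slot[1]] for slot in masked}
    return visible, targets


if __name__ == "__main__":
    # Three toy views, each with placeholder strings standing in for real data.
    views = [{"image": f"img{i}", "pose": f"pose{i}", "depth": f"depth{i}"} for i in range(3)]
    visible, targets = mask_views(views, mask_ratio=0.5, rng=random.Random(0))
    print(len(targets))  # 4 of the 9 slots are hidden
```

In an actual training loop, the visible inputs would be fed to the model and its predictions scored against `targets`; that part is left out of this sketch.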

In its research paper, Apple notes that the traditional photogrammetry approach “typically requires a dense collection of images, often hundreds, to achieve robust and accurate 3D reconstruction, which can be troublesome in practical applications.” The Matrix3D model, meanwhile, only needs two or three images for the same output, greatly reducing the requirements for photogrammetry.

Apple Vision Pro can already convert 2D images into 3D, and it can do so with any image, even one without Portrait Mode depth data.

The other artificial intelligence model revealed by Apple has more to do with videos than images.

StreamBridge acts as a “proactive streaming assistant”

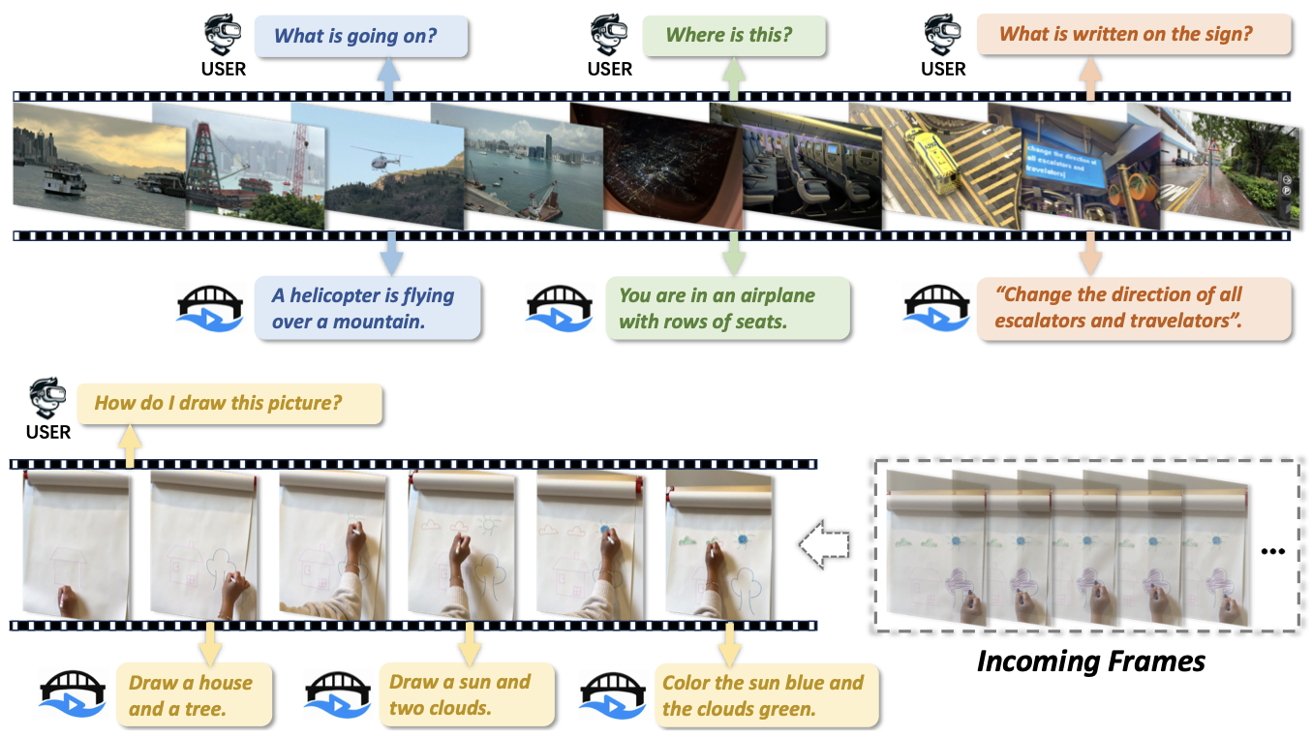

Apple’s research paper on StreamBridge says it’s a framework that transforms “video-LLMs into streaming-capable models.” While some AI models process video input by processing pre-recorded video files in their entirety, Apple’s StreamBridge model is capable of offering “multi-turn real-time understanding” and “proactive response generation.”

Apple’s StreamBridge model acts as video-capable assistant. Image Credit: Apple’s Machine Learning Blog.

In practice, this means StreamBridge can answer a series of questions about a video in real time. Apple’s example includes questions about the events in a video and its location, along with a question about a specific object that appears in the footage.

StreamBridge can also offer instructions without being asked, as “the model actively monitors the visual stream and generates timely outputs based on unfolding content.” The example provided by Apple shows its AI model giving a user “step-by-step guidance as the drawing progresses without being explicitly asked, simulating continuous support in dynamic environments.”
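The article does not explain how StreamBridge decides when to speak up. To make the idea of proactive output concrete, here is a toy Python sketch under loudly stated assumptions: strings stand in for video frames, a fixed-size rolling window stands in for the model’s view of recent content, and a simple hand-written rule (`step_changed`, hypothetical) replaces whatever mechanism Apple actually uses. The function `proactive_stream` and its parameters are illustrative names, not part of any Apple API.

```python
from collections import deque
from typing import Callable, Iterable, Iterator


def proactive_stream(
    frames: Iterable[str],
    should_respond: Callable[[list[str]], bool],
    respond: Callable[[list[str]], str],
    window: int = 4,
) -> Iterator[tuple[int, str]]:
    """Consume frames one at a time and yield (frame_index, message)
    whenever the trigger fires, without waiting for a user question."""
    buffer: deque = deque(maxlen=window)  # keep only the most recent frames
    for idx, frame in enumerate(frames):
        buffer.append(frame)
        recent = list(buffer)
        if should_respond(recent):
            yield idx, respond(recent)


def step_changed(recent: list[str]) -> bool:
    # Hypothetical trigger: speak up when the latest frame shows a new step.
    return len(recent) >= 2 and recent[-1] != recent[-2]


def describe(recent: list[str]) -> str:
    # Hypothetical responder: announce the newly observed step.
    return f"Next: {recent[-1]}"


if __name__ == "__main__":
    frames = ["sketch outline", "sketch outline", "add shading", "add shading", "add color"]
    for idx, msg in proactive_stream(frames, step_changed, describe):
        print(idx, msg)
    # prints "2 Next: add shading" then "4 Next: add color"
```

The generator structure mirrors the streaming setting: output is produced as frames arrive rather than after the whole video has been seen.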

Other tech companies have released video AI tools of their own, which also aim to offer instructions based on video input.

During its annual Google I/O developer conference in May 2024, Google showcased a use case in which users could ask a question in video form and receive an AI-generated response or suggestion.

As part of the event, Google’s AI was shown a video of a broken record player and asked why it wasn’t working. The software identified the record player’s model and suggested that it might not be working because it was not properly balanced.

What could Apple’s new AI models lead to?

Apple’s StreamBridge model could take this concept one step further, as it can process live video streams and offer step-by-step instructions as the input changes, rather than a single answer about a recorded clip.

No such feature has been unveiled yet, but it is something that could arrive in a future Apple Intelligence update, possibly through Siri or the Camera app.

As for Matrix3D, the company’s photogrammetry model could lead to new, more powerful features for Apple Vision Pro and its successors, which are reportedly already in the works.
