Quantum computing is an emerging area of computer science focused on building computers based on the principles of quantum theory to dramatically increase computation speeds. It aims to use the unique behaviors of quantum physics to solve problems that are too complex for classical computing and to provide massive performance gains in existing high-end applications. To do so, specialized quantum computing hardware and algorithms take advantage of quantum properties, such as superposition and entanglement.

Quantum computers mark a potential leap forward in computing capability for certain applications. For example, quantum computing promises substantial advantages for tasks such as simulation, optimization and integer factorization. If the technology delivers on that promise, it could have a significant impact on IT investments in various industries, including pharmaceuticals, healthcare, manufacturing, cybersecurity and finance.

Numerous labs, government agencies, universities and vendors around the world are developing quantum computing technology. The effort includes tech giants, such as Amazon, Google, IBM, Intel and Microsoft; various quantum startups; research universities, including Massachusetts Institute of Technology, Harvard and Oxford University; several national laboratories in the U.S.; the National Institute of Standards and Technology; and many other organizations.

However, most experts estimate it could take several years and possibly decades before widespread use of quantum computing in business applications becomes a reality. Current quantum systems are extremely fragile, error-prone and expensive, and more research and development work is needed to make them reliable and affordable enough to be a practical alternative to classical computers.

Read this comprehensive guide for further details on what quantum computing is and an overview of how it works, its potential benefits, the technical challenges, quantum technologies and more.

How does quantum computing work?

Quantum computing takes advantage of how quantum matter works. Where classical computing uses binary bits (1s and 0s), quantum computing uses qubits: particles such as electrons and photons whose charge, spin or polarization encodes a 0, a 1 or a superposition of both. The ability of qubits to be in a combination of states at the same time is what gives quantum computers much of their processing power.
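A qubit’s state is usually written as two complex amplitudes, one for 0 and one for 1, and the probability of each measurement result is the squared magnitude of its amplitude (the Born rule). The minimal sketch below uses only the Python standard library; the helper name `measurement_probabilities` is hypothetical and chosen for illustration. It checks that an amplitude pair is a valid, normalized qubit state and returns the two probabilities.

```python
import math

# A single qubit is described by two complex amplitudes (alpha, beta):
# alpha weights the |0> state and beta weights the |1> state.
def measurement_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the qubit alpha|0> + beta|1> (Born rule)."""
    p0 = abs(alpha) ** 2
    p1 = abs(beta) ** 2
    # Valid qubit states are normalized: the two probabilities sum to 1.
    if not math.isclose(p0 + p1, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1

# A classical bit can only be (1, 0) or (0, 1); a qubit can hold any
# normalized pair of complex amplitudes.
print(measurement_probabilities(1, 0))                              # definite 0
print(measurement_probabilities(math.sqrt(0.5), math.sqrt(0.5)))    # equal mix
print(measurement_probabilities(math.sqrt(0.8), 1j * math.sqrt(0.2)))
```

Only the first case behaves like a classical bit; the other two are superpositions that yield 0 or 1 at random when measured.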

Development of quantum theory, also called quantum mechanics, began in 1900 when German physicist Max Planck proposed that energy is emitted and absorbed in discrete units, or quanta. Work by other scientists over the following 30 years led to the modern understanding of quantum theory.

The elements of quantum theory include the following:

- Energy, like matter, consists of discrete units as opposed to continuous waves.

- Elementary particles of energy and matter can behave like particles or waves, depending on the conditions.

- The behavior of elementary particles is inherently probabilistic, so the outcome of an individual measurement can’t be predicted with certainty.

- Two complementary values, such as the position and momentum of a particle, can’t both be measured with arbitrary precision at the same time. The more precisely one value is measured, the less precisely the other can be known.
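The last item is Heisenberg’s uncertainty principle. In its standard textbook form for a particle’s position x and momentum p, where each Δ denotes the standard deviation of that quantity and ħ is the reduced Planck constant, it reads:

```latex
\Delta x \, \Delta p \;\ge\; \frac{\hbar}{2}
```

Because the product has a fixed lower bound, halving the uncertainty in position doubles the minimum possible uncertainty in momentum.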

Features of quantum computing

Two principles of quantum mechanics — superposition and entanglement — play a key role in how qubits operate. They are the most important features of quantum computers, enabling complex calculations on huge amounts of data.

Here’s why they are so critical to quantum computing’s unique theoretical advantages.

Superposition

Superposition refers to a qubit’s ability to exist in a combination of its possible states, 0 and 1, until it is measured. Think of a qubit as an electron in a magnetic field. The electron’s spin might be either in alignment with the field, known as a spin-up state, or opposite to the field, known as a spin-down state. Changing the electron’s spin from one state to the other is achieved by using a pulse of energy, such as from a laser. If only half a unit of laser energy is used, and the particle is isolated from external influences, it enters a superposition of spin-up and spin-down. When it is measured, it is found in one state or the other, with probabilities set by the superposition.
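In the circuit model used by most quantum programming tools, the “half pulse” step is commonly modeled (up to details of phase) by a Hadamard gate, which turns a qubit in state 0 into an equal superposition of 0 and 1. The toy sketch below swaps the physical laser picture for that abstract gate, assumes ideal noise-free hardware and then samples repeated measurements to show that each one yields a definite 0 or 1.

```python
import math
import random

# One qubit as [amplitude of |0>, amplitude of |1>], starting in |0>.
ZERO = [1 + 0j, 0 + 0j]

# Hadamard gate: sends |0> to the equal superposition (|0> + |1>) / sqrt(2).
h = 1 / math.sqrt(2)
H = [[h, h],
     [h, -h]]

def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def measure(state, rng):
    """Sample one outcome with the Born rule; the result is always 0 or 1."""
    return 0 if rng.random() < abs(state[0]) ** 2 else 1

rng = random.Random(42)   # fixed seed so runs are repeatable
plus = apply(H, ZERO)
counts = [0, 0]
# Each iteration models preparing a fresh qubit in the same state and
# measuring it once, since a measurement destroys the superposition.
for _ in range(10_000):
    counts[measure(plus, rng)] += 1

print(plus)    # both amplitudes are about 0.707
print(counts)  # each count lands near 5,000
```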

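Because each qubit can be in a superposition of 0 and 1, a register of N qubits is described by 2^N amplitudes, one for every possible combination of bit values, and a quantum operation acts on all of them at once. A quantum computer with 500 qubits would therefore work with a state described by 2^500 amplitudes, far more than any classical computer could store. That doesn’t mean it returns 2^500 answers in a single step: measuring the register yields only N bits, so algorithms must be designed to make the useful answer the likely one.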
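To make the exponential growth concrete, the sketch below counts the amplitudes needed to describe an N-qubit register and the memory a classical machine would need to hold them. It assumes 16 bytes per amplitude (a double-precision complex number, a common choice in classical simulators). The last line counts the decimal digits of 2^500, the amplitude count for a 500-qubit register.

```python
# Assumption: each amplitude is stored as a double-precision complex
# number (two 8-byte floats), as many classical simulators do.
BYTES_PER_AMPLITUDE = 16

def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes describing an n-qubit register."""
    return 2 ** n_qubits

def state_vector_bytes(n_qubits: int) -> int:
    """Memory needed to store every amplitude of the register."""
    return amplitudes(n_qubits) * BYTES_PER_AMPLITUDE

for n in (1, 10, 30, 50):
    print(f"{n:>3} qubits: {amplitudes(n):,} amplitudes, "
          f"{state_vector_bytes(n) / 2**30:,.1f} GiB")

# 2**500 has 151 decimal digits, far beyond the roughly 10**80 atoms
# usually estimated for the observable universe.
print(len(str(2 ** 500)))
```

Each added qubit doubles the memory: 30 qubits already need 16 GiB, and 50 qubits need about 16 million GiB.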

Entanglement

Entangled particles are qubits that share a single joint state, so the result of measuring one is correlated with the result of measuring the other. In a commonly described example, knowing the spin state of one entangled particle (up or down) reveals that the other’s spin points in the opposite direction. Because of superposition, neither particle’s spin is determined until the moment of measurement; once one is measured, the other is always found with the opposite spin.
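The perfect anticorrelation described above can be reproduced with a toy simulation of one specific entangled state, (|01> + |10>)/√2, chosen here as an assumption because it always gives opposite results. Each qubit’s own result is still random, which is why such correlations can’t be used to send a message: the pattern appears only when the two lists of results are compared afterward.

```python
import math
import random

# Two qubits as four amplitudes for |00>, |01>, |10>, |11>
# (index = 2 * first_qubit + second_qubit). Here 0 stands for spin up
# and 1 for spin down.
s = 1 / math.sqrt(2)
pair = [0.0, s, s, 0.0]   # (|01> + |10>) / sqrt(2): spins always opposite

def measure_both(state, rng):
    """Sample a joint outcome (first, second) with the Born rule."""
    probs = [abs(a) ** 2 for a in state]
    index = rng.choices(range(4), weights=probs)[0]
    return index // 2, index % 2

rng = random.Random(7)
outcomes = [measure_both(pair, rng) for _ in range(1_000)]

# On its own, the first qubit looks like a fair coin...
print(sum(first for first, _ in outcomes) / len(outcomes))
# ...yet the two results never agree.
print(all(first != second for first, second in outcomes))  # True
```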

These correlations hold no matter how far apart the particles are, as long as the particles remain isolated from their environment. However, entanglement can’t be used to send information faster than light, because the outcome of each individual measurement is still random.

A third principle, quantum interference, plays an important role in how superposition and entanglement can be harnessed to perform calculations. The amplitudes of a particle’s possible states combine like waves, reinforcing some outcomes and canceling others. Quantum algorithms arrange for particles in superposition to interfere so that desired outcomes become more likely when the state of the particles is measured.
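Interference is easiest to see with two Hadamard gates in a row. After the first, the qubit is an equal superposition; the second gate sends each amplitude along two paths, and the two contributions to the 1 outcome carry opposite signs and cancel, so the qubit returns to 0 with certainty. The sketch below is a toy state-vector calculation in plain Python that assumes ideal, noise-free gates.

```python
import math

h = 1 / math.sqrt(2)
H = [[h, h],
     [h, -h]]   # Hadamard gate

def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def probabilities(state):
    """Born-rule probabilities of measuring 0 and 1."""
    return [abs(a) ** 2 for a in state]

start = [1.0, 0.0]         # the qubit begins in |0>
once = apply(H, start)     # equal superposition: amplitudes [h, h]
twice = apply(H, once)     # amplitudes [h*h + h*h, h*h - h*h]

print(probabilities(once))   # about [0.5, 0.5]
print(probabilities(twice))  # about [1.0, 0.0]: the two paths to |1> cancel
```

Measuring after the first gate gives a coin flip; measuring after the second gives 0 every time, even though no measurement happened in between.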

Together, quantum superposition, entanglement and interference enable enhanced computing power, because each added qubit doubles the size of the state a quantum computer can work with, so capacity grows exponentially.

However, today’s quantum computers operate at a much smaller scale than is theoretically possible, largely because their quantum properties are prone to collapse from disturbances, or “noise,” such as vibration or radiation. Thus, the current stage of quantum technology is called noisy intermediate-scale quantum (NISQ) computing, a term for machines with tens to a few hundred qubits that aren’t yet protected by full error correction.

NISQ machines are useful for quantum experimentation and algorithm development and have done much to advance the technology. However, they lack the scale to exceed the computational capabilities and practicality of classical computers — a milestone called quantum advantage that is the holy grail of developers.

Quantum computers vs. classical computers and supercomputers

Beyond the aforementioned differences in physical and logical bits of quantum computers vs. classical computers, there’s another big differentiator that determines their practicality. Most classical computers are affordable, smaller than a breadbox and able to run in offices and other traditional business environments. Quantum computers, in contrast, are typically car-sized and extremely resource-intensive, and they require significant amounts of energy and cooling to run properly.

In fact, in most quantum computer architectures, the hardware primarily consists of cooling systems that keep the processor at extremely low temperatures. For example, IBM has used a dilution refrigerator to cool a quantum system down to about minus 459 degrees Fahrenheit, a fraction of a degree above absolute zero.

Supercomputers differ from classical computers mostly in degree, since they use the same semiconductor circuitry and binary logic. But they multiply those classical capabilities to such an extent that they can already do many of the calculations and simulations that quantum computers can do only in theory until their practical challenges are overcome.

Supercomputers consist of thousands or millions of classical processors that can perform calculations in parallel — another touted benefit of quantum computing — and at high speeds. They have long been used for tasks that require massive data processing, such as weather forecasting and molecular simulation.

However, the ability of quantum computers to process information in ways not possible on classical computers could enable them to solve certain complex problems exponentially faster than supercomputers, in use cases such as cryptography, optimization and simulation. For example, Google’s Sycamore quantum processor purportedly takes six seconds to do calculations that would take the world’s fastest supercomputer, HPE’s El Capitan, several decades to complete.

Types of quantum technology

Several forms of quantum technology could have major implications for IT. They include the following.

Quantum processing

Quantum processing is currently achieved through several different technologies:

- Quantum annealing. This process uses superconducting qubits and specializes in optimization problems.

- Superconducting quantum computing. Electronic circuits and microwave pulses manipulate the quantum states of qubits.

- Trapped ion computing. Ions (electrically charged atoms) are confined by electromagnetic fields to form qubits, which are then manipulated with lasers.

- Neutral atom computing. Atoms with zero net electrical charge represent qubits, which are then manipulated with lasers. Proponents consider it more scalable than other methods.

- Photonic computing. Using methods such as beam splitters, this process manipulates light to implement logic gates, without complex cooling requirements.

- Quantum dots. Nanoscale semiconductor crystals confine charged particles, such as electrons, and manipulate the spin states of the resulting qubits.
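Quantum annealers such as D-Wave’s are generally programmed by casting a problem as an energy function over binary variables, commonly a quadratic unconstrained binary optimization (QUBO) problem, and the hardware then searches for low-energy assignments. The sketch below brute-forces a tiny QUBO classically, only to show what an annealer is asked to find. The coefficient matrix is hypothetical, and exhaustive search like this becomes infeasible quickly as variables are added.

```python
from itertools import product

# Hypothetical 3-variable QUBO: energy(x) = sum of Q[i, j] * x_i * x_j,
# with each x_i equal to 0 or 1. Negative diagonal terms reward selecting
# a variable; positive off-diagonal terms penalize selecting both of a pair.
Q = {
    (0, 0): -1.0, (1, 1): -1.0, (2, 2): -1.0,  # reward for each selection
    (0, 1): 2.0, (1, 2): 2.0,                  # penalty for adjacent pairs
}

def energy(x):
    """QUBO energy of one binary assignment x."""
    return sum(weight * x[i] * x[j] for (i, j), weight in Q.items())

# Check all 2**3 assignments; an annealer instead relaxes physically
# toward low-energy states of the same function.
best = min(product((0, 1), repeat=3), key=energy)
print(best, energy(best))  # (1, 0, 1) -2.0
```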

Quantum cryptography

Quantum cryptography is a method of encryption that exploits naturally occurring properties of quantum mechanics to secure and transmit data. It uses photons that represent binary bits to transmit data over fiber optic cable, which sounds like traditional binary communication. But thanks to quantum mechanics, the quantum states of the photons can’t be observed without disturbing them, and an unknown quantum state can’t be copied. The upshot is that an eavesdropper can’t try to find the encryption key without alerting the sender and receiver.
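The detection property can be illustrated with BB84, a well-known quantum key distribution protocol that the text above doesn’t name; it is used here as an assumed example. The sender encodes each bit in one of two randomly chosen bases, the receiver measures in a random basis, and both keep only the positions where their bases matched. The sketch is idealized (no channel noise or loss) and models the eavesdropper as a simple intercept-and-resend attacker.

```python
import random

def bb84_error_rate(n_photons: int, eavesdrop: bool, seed: int = 1) -> float:
    """Simulate BB84-style key sifting; return the error rate in the kept bits.

    Idealized sketch: no channel noise or loss, and the eavesdropper uses
    an intercept-and-resend attack with randomly chosen bases.
    """
    rng = random.Random(seed)
    errors = kept = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)          # sender's raw key bit
        sender_basis = rng.randint(0, 1) # 0 = rectilinear, 1 = diagonal
        photon_bit, photon_basis = bit, sender_basis

        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            # Measuring in the wrong basis gives a random result, and the
            # photon is re-sent in the eavesdropper's basis (no copying).
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)
            photon_basis = eve_basis

        receiver_basis = rng.randint(0, 1)
        received = (photon_bit if receiver_basis == photon_basis
                    else rng.randint(0, 1))

        # Sifting: keep only positions where both parties used the same basis.
        if receiver_basis == sender_basis:
            kept += 1
            errors += received != bit
    return errors / kept

print(bb84_error_rate(20_000, eavesdrop=False))  # 0.0
print(bb84_error_rate(20_000, eavesdrop=True))   # close to 0.25
```

Without an eavesdropper the sifted bits agree exactly; with one, about a quarter of them disagree, so comparing a sample of the key exposes the intrusion.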

But quantum computing can also be used to breach cybersecurity barriers. Widely used public-key encryption, such as RSA, relies on the fact that classical computers can’t practically factor the product of two very large prime numbers. A sufficiently large, error-corrected quantum computer could factor such numbers efficiently, meaning it could effectively break current forms of encryption.
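The best-known quantum factoring method is Shor’s algorithm (not named in the text above). It reduces factoring a number n to finding the order r of a random base a, the smallest r with a^r mod n = 1; only that order-finding step runs on the quantum computer. The sketch below performs order finding by classical brute force, which is exponentially slow for real key sizes, just to show how a known order yields the factors.

```python
from math import gcd

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This is the step Shor's algorithm speeds up on a quantum computer;
    brute force like this is exponentially slow for large n.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(n: int, a: int):
    """Classical post-processing used by Shor's algorithm."""
    if gcd(a, n) != 1:
        return gcd(a, n), n // gcd(a, n)   # lucky guess: a shares a factor
    r = find_order(a, n)
    if r % 2 == 1 or pow(a, r // 2, n) == n - 1:
        return None                        # unlucky base; try another a
    p = gcd(pow(a, r // 2, n) - 1, n)
    return p, n // p

print(factor_from_order(15, 7))  # order of 7 mod 15 is 4 -> (3, 5)
```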

This potential threat from quantum methods is the biggest impact of quantum computing on cryptography. Companies are already taking steps to safeguard their data and achieve crypto-agility since they won’t necessarily know when a quantum computer that can crack today’s cryptography methods has been used against them. Bad actors could secretly steal sensitive data without its owners knowing. That unpleasant reality has moved so-called post-quantum cryptography to the top of many organizations’ plans for quantum computing. Many are developing or looking to buy quantum-resistant algorithms.

Quantum sensing

Quantum sensing is a process for collecting data at the atomic level by using sensor technology that can detect changes in motion and electrical and magnetic fields. It has been used in magnetic resonance imaging for faster results and improvements in resolution.

Quantum networks

Quantum mechanics is also being tapped to develop new network infrastructure. In quantum networking, a series of linked quantum processing units (QPUs) exchange qubits while network nodes create entanglement that enables real-time data transmission. In addition, the no-cloning theorem of quantum mechanics — which states it’s impossible to make an exact copy of an unknown quantum state — means data on such networks is harder to steal. That’s the theory, at least, but quantum networking mostly exists in labs in small-scale networks for now.

Benefits of quantum computing

The potential advantages of quantum computing are significant but tend to be specialized. They include the following.

Speed

In theory, quantum computers could be far faster than classical computers for certain problems. For example, they have the potential to speed up modeling used in financial portfolio management, such as Monte Carlo simulation for gauging the probability of outcomes and their associated risks.
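One proposed route to that speedup is quantum amplitude estimation (an assumption here; the text above doesn’t name a method), which in idealized theory needs on the order of 1/ε queries to reach accuracy ε, versus roughly 1/ε² samples for classical Monte Carlo. The sketch runs a classical Monte Carlo estimate for a hypothetical standard normal loss model and then compares the two scaling laws, ignoring constant factors and quantum hardware overhead.

```python
import math
import random

THRESHOLD = 1.5   # hypothetical loss threshold, in standard deviations

def classical_estimate(samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of P(loss > THRESHOLD) for a hypothetical
    standard normal loss model."""
    hits = sum(rng.gauss(0.0, 1.0) > THRESHOLD for _ in range(samples))
    return hits / samples

def samples_needed_classical(p: float, eps: float) -> int:
    """Samples for a standard error of eps: solve sqrt(p(1-p)/M) = eps."""
    return math.ceil(p * (1 - p) / eps ** 2)

def queries_needed_quantum(eps: float) -> int:
    """Idealized amplitude-estimation scaling of about 1/eps queries
    (constant factors and hardware overhead ignored)."""
    return math.ceil(1 / eps)

p_exact = 0.5 * math.erfc(THRESHOLD / math.sqrt(2))   # about 0.0668
rng = random.Random(0)
print(classical_estimate(100_000, rng))

for eps in (1e-2, 1e-3, 1e-4):
    print(eps, samples_needed_classical(p_exact, eps), queries_needed_quantum(eps))
```

Each tenfold gain in accuracy costs about 100 times more classical samples but, under the idealized quantum scaling, only about 10 times more queries.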

Solving complex problems

Quantum computers are designed to work on many possible states at once through superposition. This capability can be particularly useful in factorization, the mathematical problem whose difficulty underpins widely used encryption standards such as RSA.

Simulation

Quantum computers are expected to simulate more intricate systems than classical computers can, and existing machines are already running experimental simulations. This is especially promising for molecular simulations, which are essential to drug development.

Optimization

Quantum computing’s ability to process huge amounts of complex data is already helping to solve optimization problems in areas like manufacturing and supply chain management, where the challenge is to find the best use of available resources.

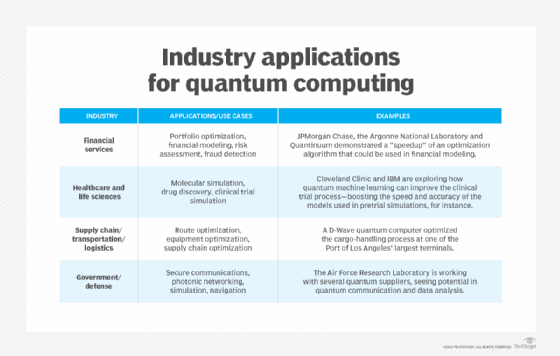

How companies could use quantum computers

Quantum computers have the potential to disrupt some areas where classical computers are used today, including the following fields.

AI and ML

Quantum computers could aid artificial intelligence (AI) and machine learning (ML) by improving the handling of complex, high-dimensional data; processing large data sets; and improving optimization, feature extraction and data representation. Quantum machine learning is a particular focus of research. It is based on quantum neural networks, which use quantum features such as superposition and entanglement instead of the binary approach of current neural nets, and it’s expected to speed up ML model training and be better at handling multidimensional data.

While research suggests quantum-powered AI could be faster and more accurate than AI on classical computers, it will have to overcome the usual hardware instability and error-correction issues of quantum technology to become practical.

Simulation

Quantum computers are already being tested to boost the computational efficiency of simulating complex systems. For example, the technology is being used in healthcare research to simulate the behavior of molecules and interactions between drugs. Applications of quantum computing in finance also take advantage of simulation for important functions, such as portfolio management and fraud detection.

Optimizing business processes

Quantum computers could be used to improve research and development, supply chain optimization and production processes. Companies have already started using quantum computing in supply chain applications for route optimization, cargo loading and other such processes.

Cryptography

Quantum key distribution is one popular security application of quantum cryptography. Organizations are also starting to evaluate the three post-quantum cryptography standards, FIPS 203, FIPS 204 and FIPS 205, that NIST released in August 2024.

Limitations and drawbacks of quantum computing

While the benefits of quantum computing are promising, there are still huge challenges to overcome, including the following.

External interference

The slightest disturbance in a quantum system can cause a quantum computation to collapse — a process known as decoherence. Quantum computers must be completely isolated from external interference during the computation phase. Some success has been achieved by using qubits in intense magnetic fields, but the short lifespan of qubits remains one of quantum computing’s great unsolved problems.
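A common simplified way to quantify a qubit’s short lifespan is an exponential loss of coherence, exp(−t/T2), where T2 is the coherence time. The sketch below uses that model with hypothetical figures (a 100-microsecond T2 and 50-nanosecond gates) to estimate how many sequential gates fit before coherence drops below a chosen threshold. Real devices vary widely, and real decoherence involves more than this single decay constant.

```python
import math

# Hypothetical figures for illustration only; real values vary widely by
# hardware type and device generation.
T2_MICROSECONDS = 100.0      # coherence time
GATE_NANOSECONDS = 50.0      # duration of one gate

def coherence_remaining(t_microseconds: float, t2: float = T2_MICROSECONDS) -> float:
    """Fraction of coherence left after time t, modeled as exp(-t / T2)."""
    return math.exp(-t_microseconds / t2)

def gates_before(threshold: float) -> int:
    """Sequential gates that fit before coherence falls below `threshold`."""
    t_limit = -T2_MICROSECONDS * math.log(threshold)   # in microseconds
    return int(t_limit * 1000 / GATE_NANOSECONDS)

print(coherence_remaining(100.0))   # about 0.37 after one T2
print(gates_before(0.9))            # 210
```

Under these assumptions, only about 210 gates fit before 10% of the coherence is lost, which is one reason long computations need error correction.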

Error correction

Qubits aren’t digital bits of data, so they can’t use conventional error correction, which works by copying and checking bits; the no-cloning theorem rules out copying an unknown quantum state. Error correction is critical in quantum computing, where even a single error in a calculation can invalidate the entire computation. There has been considerable progress in developing quantum error-correction algorithms, but they aren’t yet practical on current quantum computers because of the large number of redundant physical qubits they require for every logical qubit.

Other efforts focus on error mitigation, a process for minimizing the effect of errors rather than trying to correct them. Proponents say it could enable higher qubit capacities than error correction and speed up development of practical quantum computers. Another strategy, error suppression, uses a variety of techniques to reduce errors at the hardware level, such as applying pulses of energy to qubits to keep them in a quantum state.
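The redundancy behind error correction can be illustrated with the simplest case, a three-qubit bit-flip (repetition) code: one logical bit is spread across three physical qubits, and a majority vote undoes any single flip. The sketch below is a classical simulation that assumes independent bit-flip errors with probability p; real quantum codes, such as surface codes, must also handle phase errors and detect errors without measuring the data qubits directly.

```python
import random

def logical_error_rate_analytic(p: float) -> float:
    """Majority vote over 3 copies fails when 2 or 3 flip:
    3p^2(1-p) + p^3 = 3p^2 - 2p^3."""
    return 3 * p**2 - 2 * p**3

def logical_error_rate_sampled(p: float, trials: int, seed: int = 3) -> float:
    """Monte Carlo check of the same failure rate."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))  # independent flips
        failures += flips >= 2                           # majority vote is wrong
    return failures / trials

for p in (0.01, 0.1):
    print(p, logical_error_rate_analytic(p), logical_error_rate_sampled(p, 100_000))
```

Encoding helps only when the physical error rate is low enough: with p = 0.01 the logical error rate falls to about 0.0003, and this particular code beats an unprotected bit only while p is below 0.5.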

Data integrity

Reading out the result of a quantum calculation requires measurement, which collapses the qubits’ superposition and can destroy the information the computation produced. Algorithms designed around the distinct shape of the probability distribution that quantum computers produce, such as database search algorithms, can avoid this problem: they concentrate probability on the correct answer so that, once the calculations are performed, the act of measurement returns it with high probability.
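The best-known quantum database search method is Grover’s algorithm, assumed here to be the kind of algorithm the text refers to. It flips the sign of the marked item’s amplitude, then reflects every amplitude about the mean, which piles probability onto the marked item. For four items (two qubits), a single round makes the answer certain, as the toy state-vector sketch below shows; for N items about (π/4)√N rounds are needed, and success is then highly likely rather than guaranteed.

```python
import math

def grover_two_qubits(marked: int) -> list[float]:
    """One Grover iteration over 4 items (2 qubits); return outcome probabilities."""
    n = 4
    # Start in the uniform superposition: each item has amplitude 1/2.
    state = [1 / math.sqrt(n)] * n
    # Oracle: flip the sign of the marked item's amplitude.
    state[marked] = -state[marked]
    # Diffusion: reflect every amplitude about the mean amplitude.
    mean = sum(state) / n
    state = [2 * mean - a for a in state]
    return [a * a for a in state]

print(grover_two_qubits(marked=2))  # [0.0, 0.0, 1.0, 0.0]
```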

Long-term storage of quantum information has also been a problem. However, recent breakthroughs have made certain forms of quantum computing storage practical.

Limited software

Quantum software is in short supply and tends to be unique to individual systems. This limits the interoperability of software across systems, making it less worthwhile to invest resources in software development and inhibiting market growth.

Skills gap

Quantum research skills are highly specialized, and most of the people who have them gained their experience working in labs and academia. As a result, there aren’t enough workers with quantum skills to take jobs in the growing commercial sector. More developers are learning to write quantum-inspired software for classical computers, and AI-assisted programming shows promise, but there’s still a huge need for quantum education and on-the-job training.

Examples of quantum computers

Among the companies building quantum computers are the following:

- D-Wave Quantum. Formerly named D-Wave Systems, the company introduced the first commercially available quantum computer in 2011. Its latest model, the D-Wave Advantage, uses quantum annealing and has a processor with more than 5,000 qubits.

- IBM. The tech giant released its first integrated quantum computing system designed for commercial use, the Quantum System One, in 2019. Four years later, it introduced the Quantum System Two, which uses a modular architecture that IBM said will be the basis of its future efforts to boost quantum speed and capacity.

- IonQ. IonQ sells computers that use trapped-ion technology, starting with the Aria, introduced in 2022, and extending to several newer systems, each of which brought significant increases in qubit capacity.

- Quantum Computing Inc. QCI sells systems that use a photonic method to implement a new technology called entropy quantum computing (EQC) in several generations of its Dirac model. The company claims EQC offers speed and coherence advantages over competing technologies.

- Quantinuum. Quantinuum sells two trapped-ion computers, the System Model H1 and H2. It is working to build a fault-tolerant quantum computer, named Apollo, by 2030, and recently announced a framework for training generative AI on H2 systems.

- Rigetti Computing. Rigetti builds superconducting quantum processors and systems. Its offerings range from the entry-level Novera processor to its latest system, the Ankaa-3, which achieves claimed two-qubit gate fidelities exceeding 99%.

- Xanadu Quantum Technologies. Another vendor choosing a photonic approach, Xanadu claims its X-Series machines can handle random-sampling problems not solvable on classical computers.

Other quantum computer manufacturers include Atom Computing, Diraq, Oxford Ionics, Pasqal, PsiQuantum, QuEra Computing and Silicon Quantum Computing.

Much of the work toward building commercial quantum computers focuses on developing QPUs rather than the surrounding infrastructure. Some of the biggest players in quantum computing, including AWS, Google, Intel and Microsoft, are QPU developers.

For example, in late 2024, Google unveiled a 105-qubit QPU called Willow that the company claimed beat a supercomputer in a standard benchmark and exhibited groundbreaking error-correction capabilities that improve exponentially when qubits are added. Google Willow was touted as a breakthrough in practical quantum computing, though real-world applications that exceed what’s possible with classical computers are only in the experimental stage.

Not to be outdone, Microsoft responded in February 2025 with the Majorana 1, the first QPU to employ a superconductivity approach called topological qubits, which the company claimed can use a new state of matter to produce highly scalable qubits. The same month, AWS announced its first QPU, Ocelot, which has hardware features designed to make error correction more scalable.

AWS, Google and Microsoft are among the several QPU developers that offer access to their products through cloud services.

Cloud-based quantum computing

Although the idea of using quantum computers can be exciting, they currently cost millions of dollars, so it’s unlikely most organizations will buy or build one. Instead, they’re likely to use cloud-based services, often referred to as quantum as a service (QaaS), that let users access quantum computing power over the internet.

Many of these services are offered directly by the same vendors that build quantum computers, including D-Wave, IBM, Quantinuum, Rigetti and Xanadu. Other QPU developers do the same, but they often partner with hyperscalers such as AWS, Google Cloud and Microsoft Azure to deliver their QaaS offerings.

In addition to offering remote access to quantum hardware, QaaS provides benefits such as cost efficiency, scalability and integration with classical computing systems.

Can you emulate quantum computers?

Quantum computers can be emulated on classical computers, but the results are slow and impractical for normal use. Simulating N qubits exactly requires storing a state vector of 2^N complex amplitudes, and performing a single computational step means updating every entry of that vector. Because of this, classical computers can only effectively simulate quantum computers up to a point. A quantum program with only a few qubits can be simulated easily, but anything above approximately 50 qubits generally requires a real quantum computer.
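The core of a state-vector simulator fits in a few lines. The minimal sketch below (plain Python, ideal gates, hypothetical function name) applies a single-qubit gate to one qubit of an N-qubit register by updating every pair of amplitudes that differ only in that qubit’s bit, so each gate touches all 2^N entries. Production simulators use heavily optimized libraries, but the scaling is the same. Because the full vector sits in memory, a developer can also inspect it at any step, which is impossible on real hardware.

```python
import math

def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector.

    The vector has 2**n_qubits amplitudes, and every gate touches all of
    them, which is why the cost grows exponentially with qubit count.
    Qubit 0 is the least significant bit of each basis-state index.
    """
    new_state = list(state)
    stride = 1 << target                  # bit position of the target qubit
    for i in range(2 ** n_qubits):
        if i & stride == 0:               # visit each amplitude pair once
            j = i | stride                # partner index with the bit set
            a0, a1 = state[i], state[j]
            new_state[i] = gate[0][0] * a0 + gate[0][1] * a1
            new_state[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new_state

h = 1 / math.sqrt(2)
H = [[h, h], [h, -h]]                     # Hadamard gate
n = 3
state = [1.0] + [0.0] * (2 ** n - 1)      # start in |000>
for q in range(n):
    state = apply_single_qubit_gate(state, H, q, n)
print(len(state), [round(a * a, 3) for a in state])  # 8 amplitudes, each 0.125
```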

However, quantum simulators — software programs that enable classical computers to emulate and run quantum circuits as if they were on a quantum computer — exist to play a supporting role. They can provide faster feedback to developers programming quantum algorithms.

Because observing the process changes the outcome, actual quantum systems are hard to debug. Quantum simulators let developers observe computations while they’re being run, which speeds up the debugging process. Simulators are also less expensive to run during development than real quantum systems.

Future of quantum computing

The current state of quantum computing is a confusing mix of competing claims of quantum advantage and a fragmented market consisting of QPU, system and software developers just learning to cooperate with each other. Case studies of practical applications come along frequently, but they tend to be in a small number of industries, such as financial services and healthcare, and are narrowly focused on the few problems that quantum computers currently excel at. Quantum-inspired algorithm development is another fast-emerging approach that seeks to apply quantum methods on classical computers; however, it lacks the unique computational and data-processing capabilities enabled by quantum mechanics.

IT industry analysts generally paint a picture of a near-term quantum future that will see QaaS providing most customers’ initial access to quantum computers, along with hybrid products that combine the strengths of classical and quantum computers.

Meanwhile, quantum engineers are working diligently to increase qubit capacity and reliability. The pace at which vendors release new quantum systems and processors is picking up.

But when will quantum computing truly be widely available? Opinions vary, but most estimates fall within a range of five years at the low end and two to three decades at the high end. If that day finally arrives, glimmers like today’s breakthroughs in optimization, simulation, AI and encryption could become normal parts of daily life.

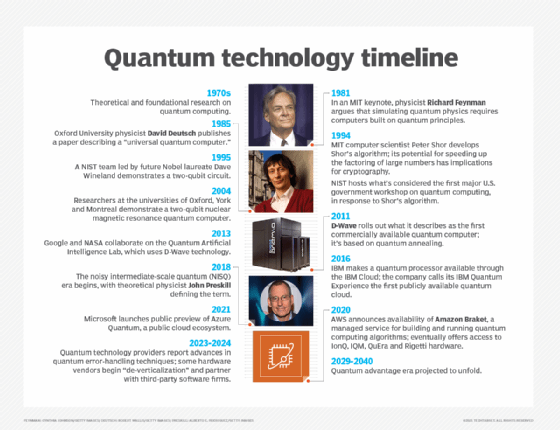

History of quantum computing

The history of quantum computers starts in the early 1900s when scientists, including Max Planck, Niels Bohr and Werner Heisenberg, laid the theoretical foundations of quantum mechanics, a new science for explaining the behavior of matter and light at the atomic and subatomic levels.

Computer applications of quantum mechanics didn’t arrive until the early 1980s, when physicist Richard Feynman asserted that only computers based on quantum principles could simulate quantum mechanics and that they could also handle calculations not possible on classical computers.

In 1985, physicist David Deutsch published a paper outlining a universal quantum computer, but it took nearly two decades for researchers to build a basic quantum system. In 2011, D-Wave introduced what it claimed was the first commercial quantum computer. In the decade that followed, major names such as Google, NASA, IBM, Microsoft and AWS released key components, such as quantum processors and cloud services.

Meanwhile, work continued on thorny practical problems, such as quantum error correction and scalability, which have yet to be solved. A key milestone was reached in 2018 when theoretical physicist John Preskill defined NISQ.

David Essex is an industry editor who covers enterprise applications, emerging technology and market trends, and creates in-depth content for several Informa TechTarget websites.

Alexander S. Gillis is a technical writer for WhatIs. He holds a bachelor’s degree in professional writing from Fitchburg State University.

This was last updated in April 2025
